#109- DeepSeek & ye shall

Taking the AI world by storm

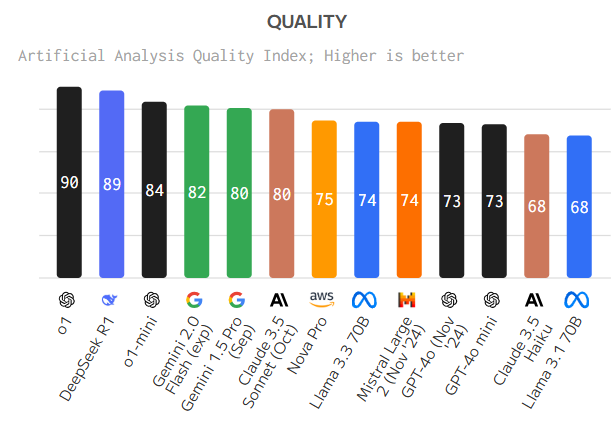

DeepSeek, a Chinese AI ‘startup’ that barely anyone in the West was paying attention to, just did something that should have been impossible. It released two models, DeepSeek-V3 (its answer to GPT-4o and Claude 3.5 Sonnet) and DeepSeek-R1 (its answer to OpenAI’s o1), that aren’t just good, they’re at the top. On par with OpenAI’s best. On par with Anthropic’s best. And crucially, also beyond Meta’s Llama 3 and Mistral, which were supposed to be the great open-source hopes.

That alone is worth stopping to think about. How? This isn’t some massive, well-funded company (well, allegedly!). And yet, with a reported final training run costing a little under $6mn, they’ve not only matched but arguably leapfrogged the best-resourced, most sophisticated AI labs in the world, which are known to spend billions.

So either DeepSeek is executing at an almost superhuman level of efficiency, or something much deeper is happening here, something that forces us to rethink how we assumed AI dominance would play out.

Why is this so shocking? Because DeepSeek barely exists. It reportedly has fewer than 200 employees. It seems to have no sprawling research campus. Searches for a decade-long pedigree in deep learning turn up very little. The story, if you believe it, is that they started life inside High-Flyer, a quant hedge fund in the mould of Two Sigma or Renaissance, until Chinese regulators cracked down on that whole industry. So they pivoted. They took all that math and engineering talent, plus the 10,000 NVIDIA A100 GPUs mentioned in an interview (or maybe the toned-down, export-controlled H800s), and funneled it all into AI research. But is that the real story? Who knows. Could be something else entirely.

This doesn’t change the fact that they just dropped two technical reports, one for DeepSeek-V3, one for DeepSeek-R1, that are so detailed, so explicit in their methodology, that we cannot just dismiss them. The work is real. The models are real. And that’s the part that I think actually matters.

These are heavy technical reports, and if you don't know much about the field (like me), you probably won't understand much of them (again, like me). So I asked people I know, who spoke to people they know, and the summary is below:

This model seems legit

“AI benchmarks are at times known to be nonsense. Everyone in the industry knows this. The goalposts get shifted and tests get gamed, the scores get optimized for, and what looks impressive in a controlled environment often falls apart in the real world. Some models out there are like that. But not R1.”

It's tempting to treat DeepSeek's advancements as purely a technical feat, a breakthrough in model quality. But to focus only on the outcome is to miss the subtler and, I think, more important story here: DeepSeek has in essence cracked the code on training and inference efficiency in a way that's almost disruptive.

“The claim of training with GPUs 45x more efficiently than the competition seems almost too neat and perfect. But when we peel back the layers, it starts to make sense. This isn’t just about using GPUs more effectively. They’ve been clever in ways that aren’t obvious. It is only possible when everything is very much integrated.”

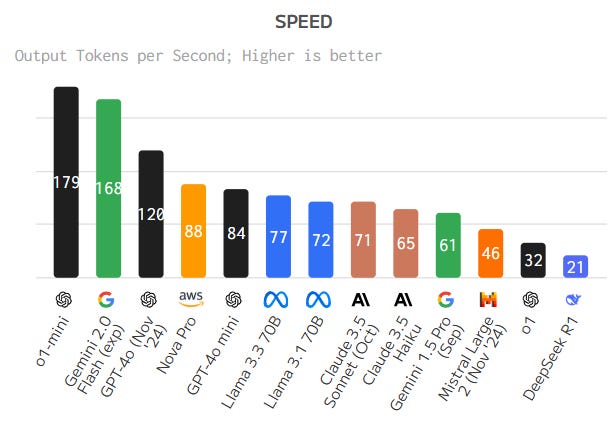

There’s something off about the $6mn price tag for training DeepSeek-V3. Compare it to OpenAI, Anthropic, and the other giants, who are throwing around hundreds of millions in budgets for training a single model, and it’s almost laughable. I’m not saying DeepSeek's models are on the same scale yet. At least not in terms of raw capabilities like output speed. But efficiency? Efficiency is a different game entirely. And that’s the real kicker. They’re showing a new way forward, not just for AI training, but for how we think about cost and scalability in this space.
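For context, the headline number is simple arithmetic. DeepSeek's V3 report puts the final training run at roughly 2.788mn H800 GPU hours and prices them at an assumed rental rate of $2 per GPU hour; the sketch below just reproduces that multiplication. By the report's own caveat, the total covers only the final run and excludes earlier research and ablation experiments, which goes some way to explaining why it looks so small.

```python
# GPU-hour breakdown and $2/hour rental price as stated in the DeepSeek-V3
# report; the total covers only the final training run, not prior research.
gpu_hours = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
price_per_gpu_hour = 2.0  # USD, the report's assumed H800 rental rate

total_hours = sum(gpu_hours.values())
cost = total_hours * price_per_gpu_hour
print(f"{total_hours:,} H800 GPU hours x ${price_per_gpu_hour:.0f}/hr = ${cost / 1e6:.3f}mn")
```

This prints 2,788,000 GPU hours and $5.576mn, which is where the "a little under $6mn" figure comes from.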

It’s easy to overlook this in the shadow of massive investments and the allure of bigger, better models. We get so focused on the end goal of bigger models and better accuracy that we forget the infrastructure, the machinery, the efficiency that can actually make those goals possible without literally bankrupting you along the way. DeepSeek might just be playing a different game than the rest of the field. And that might make all the difference in the coming years.

How is this even possible? How did this tiny Chinese company manage to outpace the brightest minds in AI, whose teams have a hundred times more resources, talent, and capital? Wasn’t China supposed to be held back by GPU export bans? The technical specifics are beyond me, but here’s a high-level thought: maybe it’s the alleged GPU shortage that fueled DeepSeek’s ingenuity. Here’s what happened:

Necessity breeds ingenuity. Lacking the vast compute budgets and the unattainable hardware of their competitors, DeepSeek had no choice but to develop radically more efficient training techniques. And those efficiency gains turned out to be a paradigm shift.

DeepSeek has made key innovations in computation, memory, and training optimization. Training largely in FP8, rather than the usual BF16 or FP32, is a major departure from industry norms. Most labs train at higher precision and only quantize down afterwards, which can sacrifice some model quality. DeepSeek has figured out how to run most of its training computation in FP8 without losing much performance. That dramatically reduces memory and computational requirements.
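A quick back-of-the-envelope calculation shows why precision matters so much. This sketch counts only the memory needed to store DeepSeek-V3's 671bn weights at each precision. It is an illustration, not DeepSeek's method: real training also holds gradients, optimizer states and activations, some of which DeepSeek keeps at higher precision, and the helper name below is hypothetical.

```python
# Back-of-the-envelope: memory needed just to store model weights at
# different numeric precisions. Illustrative only; training also needs
# gradients, optimizer state and activations on top of this.

BYTES_PER_VALUE = {"FP32": 4, "BF16": 2, "FP8": 1}

def weight_memory_gb(num_params: float, precision: str) -> float:
    """Gigabytes (10**9 bytes) needed to hold num_params values."""
    return num_params * BYTES_PER_VALUE[precision] / 1e9

TOTAL_PARAMS = 671e9  # DeepSeek-V3's total parameter count

for precision in ("FP32", "BF16", "FP8"):
    print(f"{precision}: {weight_memory_gb(TOTAL_PARAMS, precision):,.0f} GB")
```

Each halving of bytes per value halves the footprint: 2,684 GB at FP32, 1,342 GB at BF16 and 671 GB at FP8. The same factor applies to the bandwidth needed to move those numbers around, which is where much of the speedup comes from.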

Multi-token prediction system: Most LLMs predict one token at a time. DeepSeek trains its model to predict the next few tokens as well, while maintaining coherence. If those extra predictions are reused at inference time and work as claimed, they nearly double generation speed. Faster inference = lower costs.
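A toy model shows why the speedup from predicting extra tokens lands somewhat below 2x. This sketch assumes a speculative-decoding-style setup (one way multi-token predictions can be used at inference): each forward pass emits the normal next token plus drafted extra tokens, and a draft is kept only if verification accepts it. The acceptance probabilities are made-up inputs, independence between drafts is an assumption, and both functions are hypothetical, not DeepSeek's code.

```python
import random

def expected_tokens_per_step(accept_prob: float, draft_tokens: int = 1) -> float:
    """Expected tokens emitted per forward pass when the model also drafts
    `draft_tokens` extra tokens, each kept only if it and all earlier drafts
    pass verification (acceptances assumed independent)."""
    expected, keep = 1.0, 1.0  # the ordinary next token is always emitted
    for _ in range(draft_tokens):
        keep *= accept_prob    # probability this draft survives
        expected += keep
    return expected

def simulate(accept_prob: float, draft_tokens: int = 1,
             steps: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check of expected_tokens_per_step."""
    rng = random.Random(seed)
    emitted = 0
    for _ in range(steps):
        emitted += 1  # ordinary next token
        for _ in range(draft_tokens):
            if rng.random() >= accept_prob:
                break  # first rejected draft ends this step
            emitted += 1
    return emitted / steps

for p in (0.5, 0.85, 0.95):
    print(p, expected_tokens_per_step(p), round(simulate(p), 3))
```

With one drafted token and an 85% acceptance rate, each pass yields 1.85 tokens on average, and the real-world speedup is a little lower still because drafting and verifying cost some compute.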

Multi-head Latent Attention (MLA) compresses the key-value (KV) cache efficiently, reducing memory usage without compromising model quality. The KV cache, which stores the attention keys and values for every previous token, eats up an enormous amount of GPU memory, especially during inference on long contexts, so this alone could be a breakthrough.
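To make the KV-cache point concrete, here is a minimal NumPy sketch of the low-rank idea behind latent attention, using small made-up sizes (all dimensions and weight names are hypothetical). Standard attention caches full keys and values for every head; the latent approach caches one small vector per token and re-expands it into keys and values with up-projection matrices when needed. It leaves out parts of DeepSeek's actual MLA, such as its separate positional (RoPE) key component, and uses random rather than learned weights.

```python
import numpy as np

# Hypothetical, deliberately small sizes chosen for illustration.
seq_len, d_model, n_heads, head_dim, latent_dim = 1_000, 512, 8, 64, 64

rng = np.random.default_rng(0)
hidden = rng.standard_normal((seq_len, d_model))  # one layer's token states

# Standard multi-head attention: cache full keys and values for every head.
W_k = rng.standard_normal((d_model, n_heads * head_dim))
W_v = rng.standard_normal((d_model, n_heads * head_dim))
k_cache, v_cache = hidden @ W_k, hidden @ W_v

# Latent idea: cache one compressed vector per token...
W_down = rng.standard_normal((d_model, latent_dim))
latent_cache = hidden @ W_down
# ...and rebuild keys and values from it with up-projections on demand.
W_up_k = rng.standard_normal((latent_dim, n_heads * head_dim))
W_up_v = rng.standard_normal((latent_dim, n_heads * head_dim))
keys, values = latent_cache @ W_up_k, latent_cache @ W_up_v

standard_entries = k_cache.size + v_cache.size
latent_entries = latent_cache.size
print(f"Cache shrinks by {standard_entries / latent_entries:.0f}x")
```

The saving comes from storing latent_dim numbers per token instead of 2 * n_heads * head_dim; the trade-off is extra matrix multiplications to rebuild keys and values, which is cheap compared with the memory it frees.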

Their load-balanced Mixture-of-Experts (MoE) architecture means only 37bn of their 671bn parameters are activated for each token during inference. That keeps the compute per token close to that of a 37bn-parameter model while retaining the capacity of a far larger one, so they can deploy a much bigger model than competitors while keeping inference costs low. The catch is that all 671bn parameters still have to sit in memory, so the full model needs a multi-GPU server rather than a couple of consumer cards like Nvidia’s 4090. It’s akin to using only a small part of your brain at any moment so it doesn’t get fried by remembering everything at every point in time.
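The routing idea can be sketched in a few lines of NumPy. This is a generic top-k mixture-of-experts layer with made-up sizes and random weights (all names are hypothetical), not DeepSeek's implementation: DeepSeek-V3's router also uses a shared expert and its own load-balancing scheme, both left out here. Each token is scored against every expert, but only its top_k experts actually run.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_experts, top_k, n_tokens = 16, 8, 2, 4  # hypothetical sizes

# Each "expert" is a single weight matrix here; real experts are small MLPs.
experts = [rng.standard_normal((d_model, d_model)) for _ in range(n_experts)]
router = rng.standard_normal((d_model, n_experts))  # scores each token per expert

def moe_layer(x: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Send each token to its top_k experts and mix their outputs."""
    logits = x @ router                     # (n_tokens, n_experts)
    out = np.zeros_like(x)
    calls = np.zeros(n_experts, dtype=int)  # how often each expert ran
    for t, token in enumerate(x):
        chosen = np.argsort(logits[t])[-top_k:]  # best-scoring experts
        scores = logits[t, chosen]
        weights = np.exp(scores - scores.max())
        weights /= weights.sum()            # softmax over the chosen experts only
        for w, e in zip(weights, chosen):
            out[t] += w * (token @ experts[e])
            calls[e] += 1
    return out, calls

x = rng.standard_normal((n_tokens, d_model))
y, calls = moe_layer(x)
print(y.shape, calls.sum())  # each token ran exactly top_k experts
print(f"Toy layer: {top_k / n_experts:.0%} of experts active per token")
print(f"DeepSeek-V3 (reported): {37 / 671:.1%} of parameters active per token")
```

The unchosen experts are never multiplied, which is where the compute saving comes from; their weights still have to be loaded in memory, which is why total parameter count, not active count, sets the hardware bill.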

One of the clearest indicators of DeepSeek's potential is the price of their API. Despite offering nearly best-in-class performance, they charge about 95% less for inference requests than OpenAI or Anthropic do for their models. The cost-to-value ratio is so compelling that it can’t be ignored.

Now, there’s speculation that DeepSeek might be underreporting its GPU usage, particularly around H100s, which they seem to have more of than export restrictions would typically allow. Is it possible? Sure. But I lean toward the simpler explanation that they’re just executing a really smart, creative approach to training and inference.

The arrival of cheaper yet high-performing models could be a game-changer. Just think about AIs interacting at speeds beyond human comprehension. This opens up an entirely new dimension for real-time applications like sales, customer service, research, arbitrage, gaming, and more. Could this be a stronger push towards networks of agent-to-agent collaboration? Is that a breakthrough, or do we risk opening Pandora’s box?

For DeepSeek, I’d discount the reported cost of developing R1, but I wouldn’t dare discount the ingenuity or its implications for the ecosystem. And one thing is clear: the AI war has just begun…