#112- AI co-scientists

AI is generating and validating scientific discoveries, but are we in control?

Every now and then, we encounter a technological leap that reshapes the very fabric of human progress. Reading Google DeepMind’s latest paper on their AI Co-Scientist, built on Gemini 2.0, felt like one of those moments. While AI-generated art and language models have already dazzled the world, the notion of an AI-driven research collaborator that actively generates novel hypotheses, refines them through iteration and self-critique, and even has its findings validated through real-world experiments feels like science fiction turned reality.

But is this truly a revolution, or just another impressive but incremental step in the AI arms race? Let’s break it down.

An AI that thinks like a Scientist?

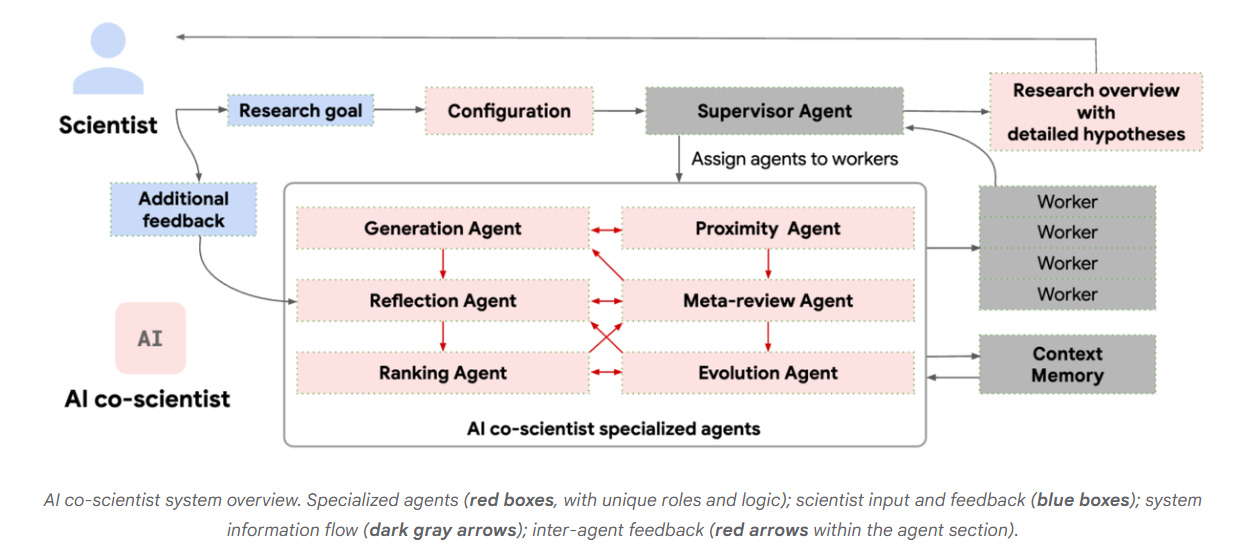

The AI co-scientist may look like another research summarization tool at first sight, but it is far from it. It actually mimics the scientific method itself. By breaking down research goals into actionable components, assigning tasks to specialized AI agents, and employing self-play-based scientific debates, it does something very human: it bloody reasons.

Traditional AI-driven literature reviews have been invaluable in speeding up research, but they often fail to propose anything truly novel. Google’s system goes a step further. It actively suggests new hypotheses and evaluates them for quality, impact, and feasibility. This “tool” is an autonomous research assistant with the potential to generate ideas human scientists might overlook. Talk about scientific progress!

Multi-agent systems

What makes this system stand out is its multi-agent approach. Instead of a monolithic AI generating outputs in isolation, DeepMind’s model incorporates different specialized agents (Generation, Reflection, Ranking, Evolution, Proximity, and Meta-review) that interact and refine each other’s work. It’s like assembling a dream research team, except the members are algorithms rather than people.

Now this approach has some rather fancy implications. It means that AI-driven discoveries are no longer limited to predefined rules and data synthesis; they can be actively debated, refined, and improved upon in real time. Given the increasing complexity of modern scientific inquiry, such a system could deliver breakthroughs in the many fields where human expertise is siloed and short on iterative imagination.
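To make the generate, critique, rank, refine loop a bit more concrete, here is a toy sketch in Python. To be clear, this is not Google's implementation: every name in it (`Hypothesis`, `generation_agent`, `toy_score`, `co_scientist_sketch`) is made up for illustration, the "agents" are plain functions standing in for LLM calls, and the Proximity and Meta-review agents are left out entirely. It only shows the shape of the loop, assuming each round keeps the best-ranked hypotheses and adds refined versions of them.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Hypothesis:
    text: str
    score: float = 0.0


def generation_agent(goal: str, n: int) -> List[Hypothesis]:
    # Hypothetical stand-in for an LLM call: emit n placeholder hypotheses.
    return [Hypothesis(f"{goal}: candidate {i}") for i in range(n)]


def reflection_agent(h: Hypothesis, score_fn: Callable[[str], float]) -> Hypothesis:
    # Stand-in for a critique step; a real reviewer would weigh
    # quality, impact, and feasibility. Here we just call score_fn.
    return Hypothesis(h.text, float(score_fn(h.text)))


def ranking_agent(pool: List[Hypothesis]) -> List[Hypothesis]:
    # Highest score first.
    return sorted(pool, key=lambda h: h.score, reverse=True)


def evolution_agent(h: Hypothesis) -> Hypothesis:
    # Stand-in for refinement: tag the text so the toy scorer can see it.
    return Hypothesis(h.text + " (refined)")


def co_scientist_sketch(
    goal: str,
    score_fn: Callable[[str], float],
    n: int = 4,
    keep: int = 2,
    rounds: int = 2,
) -> List[Hypothesis]:
    pool = generation_agent(goal, n)
    for _ in range(rounds):
        reviewed = [reflection_agent(h, score_fn) for h in pool]
        survivors = ranking_agent(reviewed)[:keep]
        # Keep the survivors and add one refined child per survivor.
        pool = survivors + [evolution_agent(h) for h in survivors]
    # Final review so freshly evolved hypotheses are scored too.
    return ranking_agent([reflection_agent(h, score_fn) for h in pool])


def toy_score(text: str) -> float:
    # Toy assumption: each refinement pass makes a hypothesis "better".
    return float(text.count("refined"))


ranked = co_scientist_sketch("liver fibrosis targets", toy_score)
for h in ranked:
    print(h.score, h.text)
```

The interesting design choice, even in a toy like this, is that no single function is trusted to be right. Generation is cheap and noisy, and the quality comes from the repeated review and ranking around it.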

Proof of the pudding is in its original recipe...

Skeptics (including yours truly) often argue that AI-generated research is just theoretical speculation. DeepMind addresses this criticism by showcasing real-world validation. This AI co-scientist has already:

Proposed novel drug repurposing candidates for acute myeloid leukemia

Identified epigenetic treatment targets for liver fibrosis, and

Independently rediscovered key findings on antimicrobial resistance

Over-achiever much?

Now, this is a major, major departure from traditional AI applications in research. Rather than merely assisting with existing hypotheses, the system is generating original ones that hold up under experimental scrutiny. If scaled effectively, this could dramatically shorten the research lifecycle in medicine, materials science, and beyond. And I mean dramatically!

But if it walks like a human, talks like a human, can it be treated like a human? Who gets the credit?

If AI plays an active role in scientific discovery, who owns the resulting intellectual property? Will research papers list AI as a co-author? If an AI-driven discovery leads to a Nobel Prize-worthy breakthrough, does the system (or its creators) get recognition? These questions aren’t theoretical anymore! They will shape the future of academia and industry.

AI-generated hypotheses introduce a strange kind of risk. Bias, reproducibility, systematic uncertainty. Hard to know which one matters most. The models don’t invent knowledge, they just extend patterns. If those patterns contain distortions, so will the conclusions. The classic garbage in, garbage out. There’s no inherent corrective mechanism. If a flawed assumption slips in early, the AI just compounds the error at scale. Maybe in ways we won’t even notice until it’s too late. That’s the real problem: the scale and speed at which it can multiply an error. Human errors, just... blown up! Feels dangerous. But maybe not. It’s really hard to say.

Anyone up for a silver lining?

Then there’s the flip side. Reimagine AI as a thought partner. Not replacing human intuition, just... stretching, twisting, magnifying, replicating it. More hypotheses, more angles, more connections across disciplines. Feels like something new. Another cognitive layer? One that doesn’t get bored or tired or stuck in loops, a perfect Sheldon Cooper? Maybe that’s what matters. Not better answers, just... more answers. More paths to walk down.

The question is whether scientists can learn to use it well. The verification step needs to be human. This seems obvious, but maybe not obvious enough. If people just start trusting AI outputs without the same level of scrutiny they’d apply to human reasoning, things could get weird fast. Not sure how you enforce that. Some fields will be rigorous. Others, maybe not so much. It really depends on the incentives, I suppose.

But it’s clear that something is fundamentally shifting. It feels like AI is a different (better?) way of doing science. And it seems inevitable that it will become the new normal, the new standard, table stakes. But is standard good? Maybe. Maybe it will be woven into the process so deeply that we never question it. Like waking up and scrolling through Twitter or Instagram. And maybe one day we’ll wake up and realize we aren’t actually steering the ship. Just interpreting its outputs. Hard to tell if that’s exciting or terrifying. Maybe both.