#123- AWS just quietly rewrote the vector database market

and in a moment vector DBs just became a feature

#118- The AI meets DB wave is here

When I first wrote about the AI x DB shift in January this year, it was mostly a forward-looking thesis. I was seeing cracks in the old database model, which still had storage-based pricing, limited compute, and legacy ops. I was reading, thinking, and spotting early signs of something new: databases built not just to store data, but to activate it.

When I published this last month, the thesis was simple: Databases are replatforming around AI. Storage is free. Compute, latency, and embedding logic are the new primitives.

But then AWS did what AWS does. It just folded a $1bn market into a line of code.

Introducing S3 Vectors: the “Pinecone killer” hiding in plain sight

In typical AWS fashion, the press release above was vanilla and dry. But the substance isn’t.

S3 Vectors turns basic S3 storage into a full-blown vector database:

Stores millions of vectors with no infra to manage

Runs semantic search in under a second

Costs ~90% less than Pinecone or Weaviate (for example, Pinecone’s starter tier costs $90/mo for 1M vectors; S3 Vectors gives you the same for under $10, with no infra)

Works out of the box with Bedrock, SageMaker, and OpenSearch

No tuning. No provisioning. Just plug, embed, and search. If you’re building RAG, search, or agent memory workflows, you no longer need a separate vector DB to get started. The primitives are built in.
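To make "embed and search" concrete, here is a minimal sketch of what a vector query does under the hood. It is a toy, not the S3 Vectors API: the vectors are hand-written stand-ins for real embeddings, the names `cosine` and `top_k` are mine, and the search is brute force, whereas a managed service would use an approximate index to avoid scanning every vector.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(index, query, k=2):
    """Brute-force search: score every stored vector, return the k best keys."""
    ranked = sorted(index.items(), key=lambda kv: cosine(kv[1], query), reverse=True)
    return [key for key, _ in ranked[:k]]

# Toy 3-dimensional "embeddings" (assumption: real ones come from an embedding model)
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.0],
    "api-rate-limits": [0.0, 0.1, 0.9],
}

query = [0.8, 0.2, 0.0]  # stand-in for the embedding of a refund question
results = top_k(index, query, k=2)
print(results)
```

The point of the sketch is how little logic sits between "I have embeddings" and "I have search", which is why it can plausibly live inside a storage service.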

This confirms what I’ve said before:

The DB is no longer a passive store. It’s a real-time, AI-native runtime.

S3 is now:

A vector-aware memory system

With sublinear search performance

Tied into Bedrock, SageMaker, OpenSearch

With tiered pricing, encryption, and auto optimization baked in

S3 itself is now the vector DB. And that should terrify everyone selling infra.

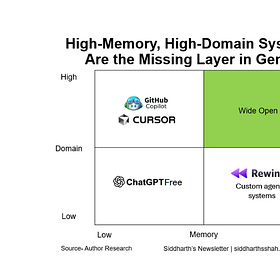

But this also sharpens the next wedge

If storage is free and infra is abstracted, what really matters?

Governance. Observability. Memory control

This is where governance and observability companies can now shine. Their job isn’t to be better infra. It’s to protect organizations from what this new infra makes possible. Because if anyone can embed, search, and retrieve vectors from S3… the audit trail dies and we have literally no idea who’s accessing what.

The new questions to ask are:

Who queried this vector index at 3am?

Was this file supposed to be embedded in the first place?

Did this LLM pull something it wasn’t authorized to retrieve?

Which vectors are leaking PII or customer data?

AWS doesn't answer these. Companies building in this space will. And if they don't, someone else will: a firewall for AI memory.
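A rough sketch of what that "firewall" could look like: a wrapper that checks every read against an access list and records who asked for what, and when. Everything here is hypothetical and in-memory (the `GovernedIndex` class, the `acl` shape, the principal names); it is not an AWS feature or any vendor's product, just the smallest thing that can answer the first and third questions above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class GovernedIndex:
    """Hypothetical wrapper: every read is ACL-checked and written to an audit log."""
    acl: dict                                   # vector key -> set of allowed principals
    vectors: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def put(self, key, vector):
        self.vectors[key] = vector

    def get(self, principal, key, now=None):
        allowed = principal in self.acl.get(key, set())
        # Log the attempt before deciding, so denied reads are recorded too
        self.audit_log.append({
            "who": principal,
            "key": key,
            "allowed": allowed,
            "at": (now or datetime.now(timezone.utc)).isoformat(),
        })
        if not allowed:
            raise PermissionError(f"{principal} may not read {key}")
        return self.vectors[key]

store = GovernedIndex(acl={"payroll-2024": {"hr-agent"}})
store.put("payroll-2024", [0.1, 0.2])

store.get("hr-agent", "payroll-2024")           # permitted read
try:
    store.get("support-bot", "payroll-2024")    # blocked, but still logged
except PermissionError:
    pass

denied = [entry for entry in store.audit_log if not entry["allowed"]]
print(denied)
```

The log entry is written before the permission check raises, so "who queried this at 3am?" has an answer even when the query was refused.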

MemoryOps also gets its validation moment

S3 Vectors proves what I’ve been betting on: Memory is now native in the AI stack. But memory without rules? That’s how you end up with prompt leaks, compliance risks, and invisible data misuse. And memory without visibility is legal and compliance hell waiting to happen.

So the stack now looks like:

Bedrock to embed

S3 Vectors to store

A tool to govern

OpenSearch if you need speed

The glue is trust.

What I’m watching now:

I expect a flood of

AI agents with S3 backends

Internal tools with semantic search

I also expect Pinecone, Weaviate, and ChromaDB to double down on hybrid search, real-time recall, and multimodal performance

But also expect a new stack of security and governance tools. Because now everyone has AI memory, and nobody knows how to govern it. That’s the new moat.

If you're building, infra is commoditizing, but only for standard RAG. Real-time, hybrid, and on-edge infra is very much alive.

If you're investing, follow the memory. Not who stores it, that's gone. Look for the one who secures it.

If you're competing with AWS, you'd better be betting on abstraction or vertical depth, and may God bless you!
