#114- Automated Data Preprocessing

The AI ModelOps Trifecta - Part I

The explosion of artificial intelligence has given birth to a complex and relatively nascent ecosystem of tools and processes that support machine learning (ML) models from conception to production. As a result, AI infrastructure is emerging as a multi-billion-dollar opportunity, particularly across an “AI ModelOps Trifecta” of pain points that enterprises face daily. This trifecta consists of-

Automated Data Preprocessing

Each of these represents a critical link in the AI value chain, often underserved by legacy software incumbents and ripe for disruption by insurgent startups. Over the next three posts, I will cover each domain in some detail: its core challenges, market, key trends, competitive landscape, and the case for a venture-scale opportunity. Later, I’ll also address common objections and outline what it might take to build the next big thing in AI ModelOps.

1. Automated Data Preprocessing

“Garbage in, garbage out”: the old adage haunts Excel workbooks and AI projects alike. Data scientists routinely spend much of their time wrangling data; by some estimates, 60–80% of their work is data cleaning and preparation. This includes cleaning messy datasets, normalizing formats, imputing missing values, engineering features, and labeling training data. The result is a huge productivity drain: highly skilled, highly paid ML experts (a very scarce resource in India) acting as data janitors.
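To make two of the chores above concrete (normalizing formats and imputing missing values), here is a minimal sketch in plain Python. The field names `country` and `age`, the sample rows, and the choice of median imputation are all assumptions made for illustration; real pipelines would pick rules per column.

```python
from statistics import median

def clean_records(rows):
    """Normalize country codes and fill missing ages with the median age.

    `rows` is a list of dicts with hypothetical keys "country" and "age".
    """
    known_ages = [r["age"] for r in rows if r["age"] is not None]
    fill_value = median(known_ages) if known_ages else None

    cleaned = []
    for r in rows:
        cleaned.append({
            # Format normalization: trim whitespace, use upper-case codes.
            "country": r["country"].strip().upper(),
            # Imputation: keep the value if present, else use the median.
            "age": r["age"] if r["age"] is not None else fill_value,
        })
    return cleaned

rows = [
    {"country": " in ", "age": 31},
    {"country": "IN", "age": None},
    {"country": "us", "age": 45},
]
cleaned = clean_records(rows)
```

Even this toy version hides judgment calls (why median rather than mean, what counts as the same country), which is part of why the work eats so much expert time.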

Enterprises are drowning in data (global data volumes are projected to reach ~175 zettabytes by the end of 2025) and racing to build AI models, yet their data pipelines are often manual and fragile. Traditional ETL (extract-transform-load) tools and hand-written Python scripts can’t keep up with the scale and complexity. This pain has given rise to a new generation of automated data preprocessing solutions aimed at drastically accelerating the data-to-insight cycle.

So what is driving this?

Several macro and technical trends are converging to make automated data prep a timely opportunity:

Data-centric AI movement: Industry leaders like Andrew Ng urge a shift from model-centric to data-centric AI, emphasizing systematic data quality improvements as the key to AI success. High-quality datasets (with consistent labels, fewer errors) can boost model performance more than fancy algorithms. This creates demand for tools which can intelligently clean and curate data at scale.

AI proliferation and big data: With AI being adopted across domains (finance, healthcare, retail, etc.), organizations are collecting massive, heterogeneous datasets. The boom in unstructured data (text, images, sensor logs) from sources like IoT and digital apps means preprocessing is more complex than ever. Manual approaches are not going to work under this sheer volume. Automating these tasks is increasingly seen as the only viable path to handle Big Data velocity and variety.

Labor and cost pressures: There’s a well-known shortage of data engineers and machine learning talent. Companies cannot afford to have their scarce ML engineers stuck on months of data cleaning (which can take as much as 60% of their time). Automated preprocessing promises a step-change in efficiency, the proverbial “10x faster” data preparation, by augmenting or replacing human effort with AI-driven solutions. For example, startups have demonstrated 10–100× speed-ups in labeling and preparing training data via programmatic techniques.

Advances in AutoML and GenAI: Improved algorithms are enabling automation of tasks that once required human intuition. AutoML platforms now often include automated data cleaning and feature engineering, using machine learning to detect outliers or suggest transformations. Generative AI (like large language models) is also beginning to assist in data prep, for example by describing how to parse a raw file or even auto-generating data labeling functions. These technical leaps make the “automation” in data prep far more credible today than a few years ago.
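As a rough illustration of what automated outlier detection means in practice, the sketch below flags values outside the classic interquartile-range fences. This is a simple statistical stand-in, not the method any particular AutoML platform uses; the function name, the `k=1.5` multiplier, and the sample readings are assumptions.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Return the values that fall outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical sensor readings with one obviously bad value.
readings = [10, 12, 11, 13, 12, 11, 95, 10, 12]
flagged = iqr_outliers(readings)
```

A production tool would go further, for instance by learning per-column thresholds or suggesting a fix, but the basic shape (profile the column, then flag what deviates) is the same.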

Automated preprocessing market landscape

The automated data preprocessing space sits at the intersection of traditional data integration tools, emerging AI/AutoML platforms, and open-source libraries.

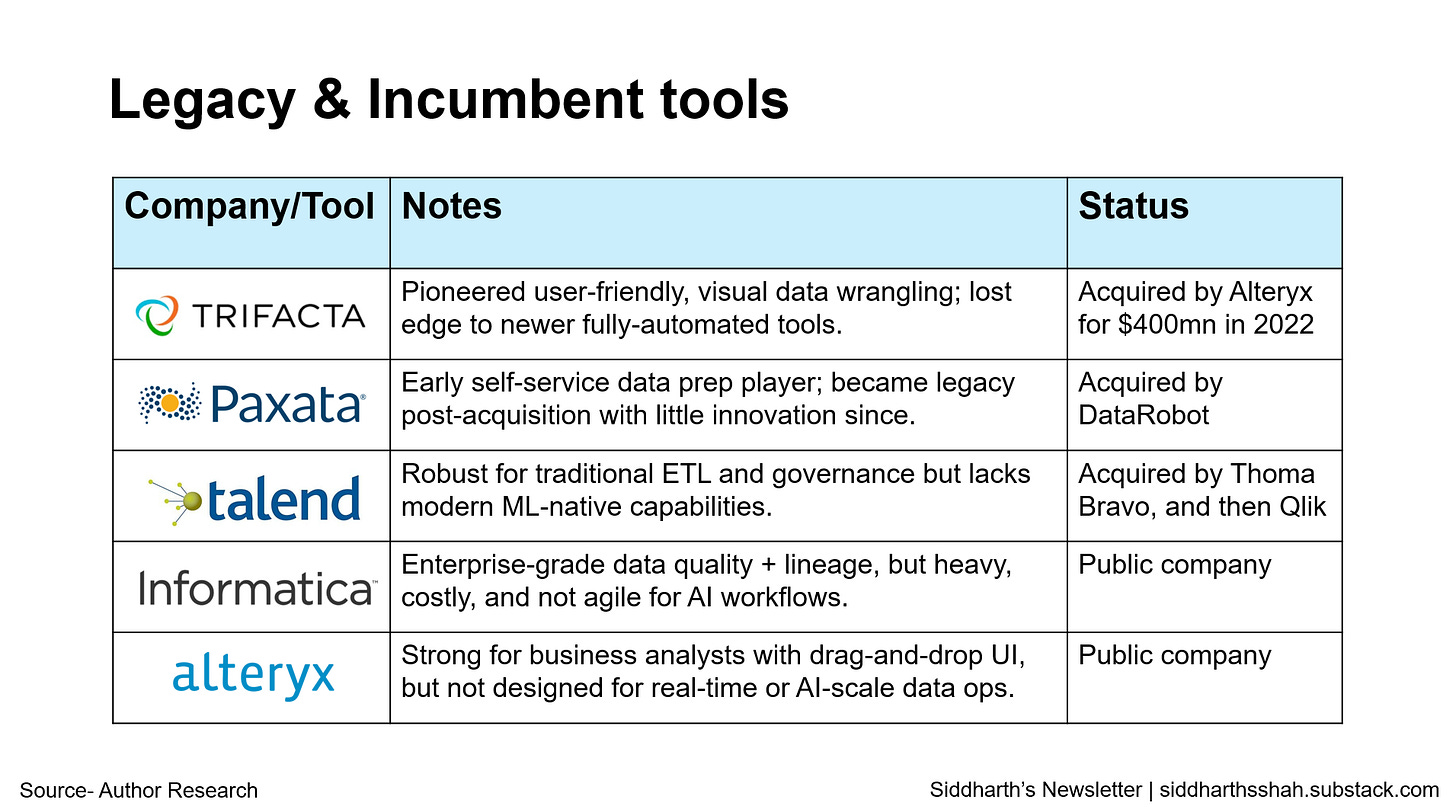

Legacy & incumbent tools- On one end, legacy data prep software like Trifacta and Paxata pioneered user-friendly data wrangling, and their acquisition by larger firms (Alteryx acquired Trifacta for $400mn in 2022) signals the strategic value of this capability. However, those tools were often semi-manual; the new wave pushes toward fully automated or AI-assisted preprocessing.

Cloud providers- Major cloud vendors have also bundled data prep into their offerings (AWS Glue DataBrew, Google Cloud Dataprep, Azure’s Power Query, etc.), often packaged with their ML platforms. These incumbents provide convenience but may not be as advanced in automation or flexibility, especially in multi-cloud environments.

Open-source solutions- These form another part of the landscape: every data scientist is armed with Pandas, some preprocessing modules, and a plethora of scripts, making a DIY “open source stack” that is essentially free. There are also specialized open-source projects like Great Expectations (data validation) and Cleanlab (automated error detection in datasets) that address pieces of the problem. The challenge for startups is to offer an order-of-magnitude improvement over this patchwork of free tools. “Where’s the 10x?” is answered by automation: a platform that automatically detects data quality issues, suggests fixes, and even applies transformations could save hundreds of hours of grunt work per project. For example, Snorkel AI’s programmatic labeling approach turned months of manual labeling into hours of work by letting domain experts label via code and heuristics, achieving up to a 10–100× reduction in time.
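The idea of labeling “via code and heuristics” can be sketched as follows. This is a toy illustration of programmatic labeling using a plain majority vote, not Snorkel's API or its actual label model (which, as I understand it, estimates how reliable each heuristic is rather than counting votes equally). The spam/ham task, the heuristics, and all names are hypothetical.

```python
from collections import Counter

ABSTAIN, HAM, SPAM = -1, 0, 1

# Each labeling function encodes one expert heuristic and may abstain.
def lf_contains_offer(text):
    return SPAM if "free offer" in text.lower() else ABSTAIN

def lf_many_exclamations(text):
    return SPAM if text.count("!") >= 3 else ABSTAIN

def lf_mentions_meeting(text):
    return HAM if "meeting" in text.lower() else ABSTAIN

LABELING_FUNCTIONS = [lf_contains_offer, lf_many_exclamations, lf_mentions_meeting]

def weak_label(text):
    """Combine heuristic votes by simple majority; abstain on ties or no votes."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    counts = Counter(votes).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return ABSTAIN  # heuristics disagree evenly
    return counts[0][0]

labels = [weak_label(t) for t in [
    "Claim your FREE OFFER now!!!",
    "Agenda for Monday's meeting",
    "hello",
]]
```

The speed-up comes from leverage: a handful of functions like these can label an entire corpus in one pass, and fixing a labeling mistake means editing a rule instead of re-annotating by hand.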

New-age startups- Despite cloud bundling and open-source competition, startups are finding a wedge by targeting specific unmet needs. Some focus on no-code AI-driven cleaning for business analysts (e.g. Sweephy offers no-code data cleaning with AI detecting typos, duplicates, and anomalies). Others, like Snorkel, tackle the labeling bottleneck with a unique approach (weak supervision), effectively creating a new category. Notable startups in this space include Snorkel AI (data labeling automation), Scale AI (data annotation with AI assistance, recently moving into synthetic data generation), causaLens (which incorporates automated data prep as part of its causal AI platform), and various early-stage companies using AI to automate data profiling and feature engineering. The presence of hundreds of startups globally underlines both the demand and the nascent, fragmented nature of this market.
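To give a feel for what detecting typos and duplicates involves, here is a minimal sketch of fuzzy duplicate detection using only the Python standard library. It is not how Sweephy or any other vendor implements this; the 0.85 similarity threshold and the sample customer names are assumptions, and the pairwise comparison would not scale to large tables without blocking or indexing.

```python
from difflib import SequenceMatcher
from itertools import combinations

def normalize(name):
    """Lower-case and collapse runs of whitespace before comparing."""
    return " ".join(name.lower().split())

def likely_duplicates(names, threshold=0.85):
    """Return pairs of names whose normalized similarity meets the threshold."""
    pairs = []
    for a, b in combinations(names, 2):
        score = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
        if score >= threshold:
            pairs.append((a, b))
    return pairs

# Hypothetical customer list containing one misspelled duplicate.
customers = ["Acme Corporation", "acme  corporaton", "Globex Ltd", "Initech"]
dupes = likely_duplicates(customers)
```

The product question is what happens next: a no-code tool has to surface pairs like these with enough context that a business analyst can confirm or reject the merge.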

Here, cloud providers and new age startups have the leg up…

Market Potential

Estimates for the data preparation market vary widely depending on definitions, but all signal robust growth. Market research pegs the data prep software/tools segment at roughly $6–8bn in 2025 and growing ~15–18% CAGR. If we include broader spending on internal data engineering and open-source tooling (the “organic” solution many use today), the opportunity expands even more.

The core driver is that every enterprise that implements AI faces data cleaning hurdles. It’s a near-universal pain point, crossing industries and geographies. A 10x-better solution here isn’t just a nice-to-have; it directly accelerates AI project timelines and ROI. The market is therefore plausibly at least $10bn, especially as AI adoption grows.
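The growth figures quoted above can be sanity-checked with simple compounding. The sketch below takes the $6–8bn base and 15–18% CAGR as given and asks how many full years each end of the range needs to cross $10bn; the function names are mine, and this is back-of-the-envelope arithmetic rather than a forecast.

```python
def project(base_bn, cagr, years):
    """Market size in $bn after compounding `cagr` for `years` years."""
    return base_bn * (1 + cagr) ** years

def years_to_reach(base_bn, cagr, target_bn):
    """Smallest whole number of years until the market reaches target_bn."""
    years, value = 0, base_bn
    while value < target_bn:
        value *= 1 + cagr
        years += 1
    return years

conservative = years_to_reach(6, 0.15, 10)  # low base, low growth
optimistic = years_to_reach(8, 0.18, 10)    # high base, high growth
```

Under these assumptions the low end of the range takes four years to pass $10bn and the high end takes two, so the $10bn figure reads as a near-term projection of tool spend rather than today's number.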

Thesis

Automated data preprocessing offers the prospect of becoming the de facto gatekeeper for AI quality – a mission-critical piece of the ML stack. A winning startup in this arena would likely start with a wedge (like automating a particularly tedious task like data deduplication or image labeling) that provides immediate 10x value over manual methods.

From this beachhead, it can expand into a broader platform handling many data prep tasks (and potentially evolve into a full “data-centric AI” suite). Lock-in can be achieved via data network effects and integration: once a company’s historical datasets, cleaning rules, and labeling knowledge are codified in a platform, switching becomes painful. A platform that continuously learns from a company’s data (e.g. improving cleaning suggestions as it ingests more tables) would create a virtuous cycle, further entrenching itself. Moreover, by sitting upstream of model training, such a startup becomes part of the model lineage. Every model’s quality depends on it, making it an indispensable link in the chain.

Exit

Strategic interest should be high. Cloud providers or big players in data analytics (Snowflake, Databricks, SAP, etc.) could acquire a best-in-class data prep startup to bolster their ecosystems. We’ve already seen it happen-

Alteryx acquired Trifacta [1]

DataRobot acquired Paxata [2]

Thoma Bravo acquired Qlik [3], Thoma Bravo acquired Talend [4], and then Qlik acquired Talend [5]

SAS acquired Hazy [6]

NVIDIA acquired Gretel [7]

Snowflake acquired TruEra [8]

There’s also a path to standalone success. Given the size of the problem, an independent company could scale to IPO with a multi-product platform. The key is proving that this is a sustainable platform business, not just a one-off feature. Yes, data prep can be a real business. The proof will be in land-and-expand traction: companies that start with automated cleaning, then upsell modules for data lineage, feature store integration, etc., will capture more of the value in the AI workflow.

For now, the unmet need and broad market make this a compelling space for mid-to-long-term bets. The 10x advantage in productivity and the universality of the pain point suggest that at least one breakout company will emerge here. Ideally, I’ll be part of its story.

In my next post, I’ll dive into Automated Data Backup & Recovery.

[1] Trifacta Joins the Alteryx Family- https://www.alteryx.com/blog/trifacta-joins-the-alteryx-family

[2] DataRobot Acquires Paxata to Bolster its End-to-End AI Capabilities- https://www.datarobot.com/newsroom/press/datarobot-acquires-paxata-to-bolster-its-end-to-end-ai-capabilities/

[3] Thoma Bravo Completes Acquisition of Qlik- https://www.thomabravo.com/press-releases/thoma-bravo-completes-acquisition-of-qlik

[4] Thoma Bravo Completes Acquisition of Talend- https://www.thomabravo.com/press-releases/thoma-bravo-completes-acquisition-of-talend

[7] Nvidia reportedly acquires synthetic data startup Gretel- https://techcrunch.com/2025/03/19/nvidia-reportedly-acquires-synthetic-data-startup-gretel/

[8] Snowflake Announces Agreement to Acquire TruEra- https://www.snowflake.com/en/blog/snowflake-acquires-truera-to-bring-llm-ml-observability-to-data-cloud/

The pain is real, and automation here is overdue. That said, would’ve liked more depth. For example, on how this differs from observability/metadata tools, and how “auto” plays out across modalities beyond tabular/text?

Today, observability tools like Monte Carlo, Metaplane, or even Databand, and metadata layers like OpenLineage, Marquez, or Feast for features, are becoming core to how preprocessing is validated and governed.

The $6–10bn TAM makes sense if you include all ETL, wrangling, and data integration efforts. But if we’re strictly talking about ML-specific preprocessing, the addressable market narrows fast!

Still, a good thesis kickoff man!